Method

1. Modeling Universal Information Extraction (UIE)

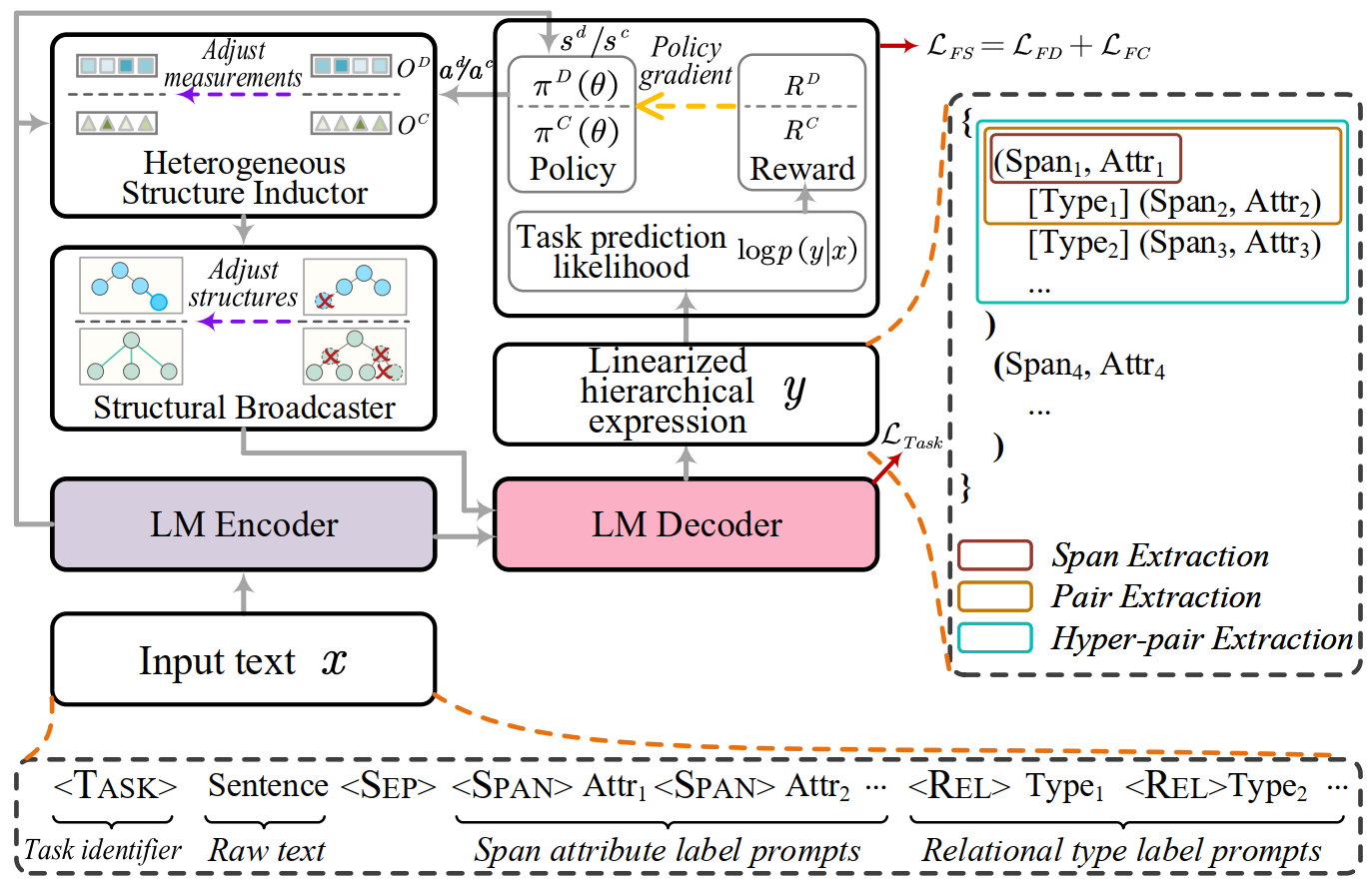

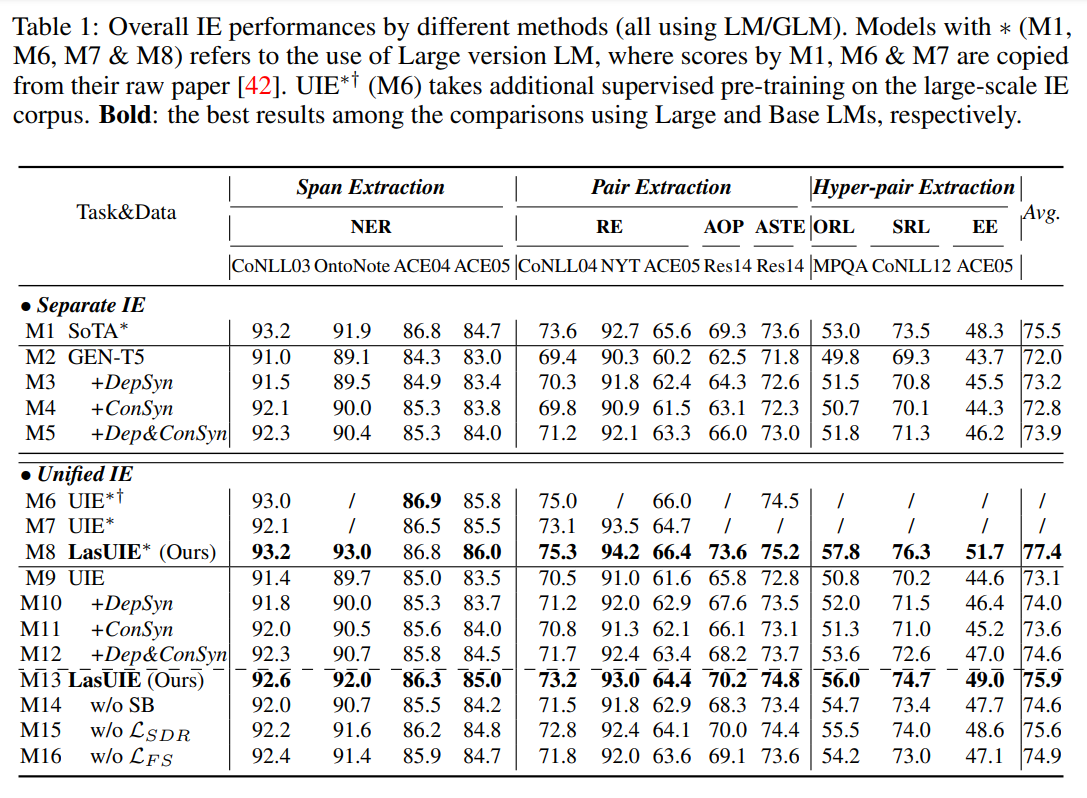

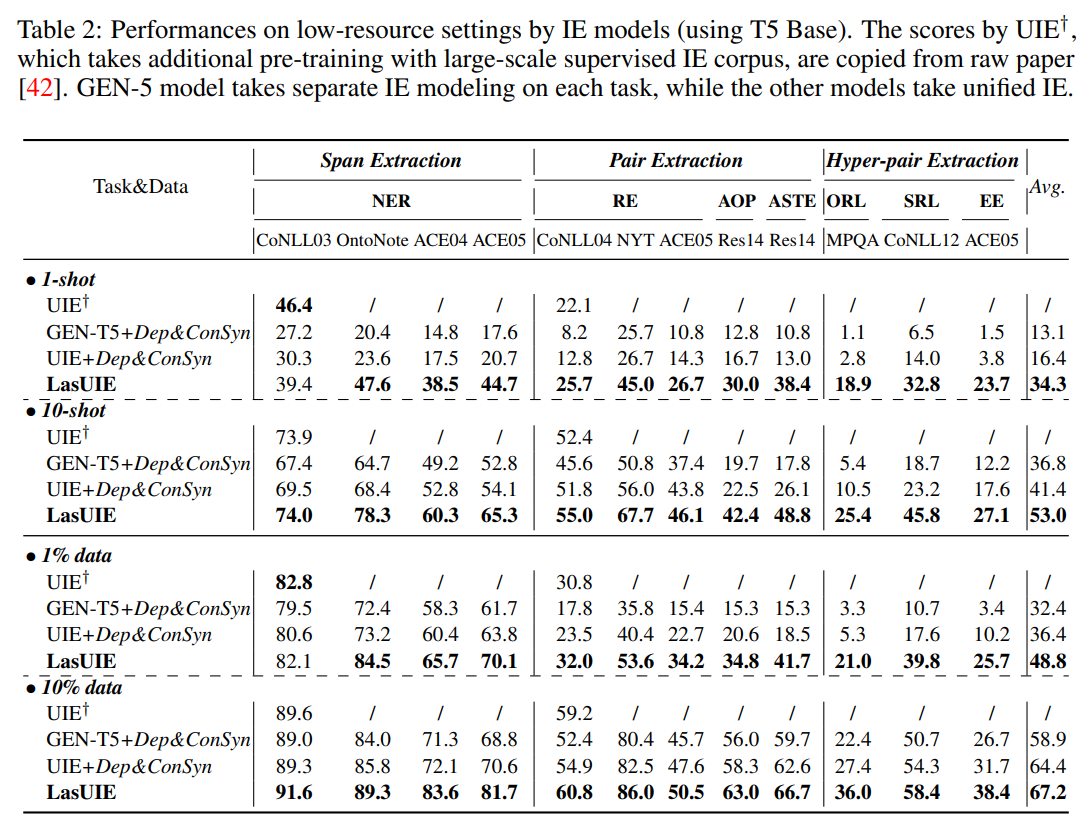

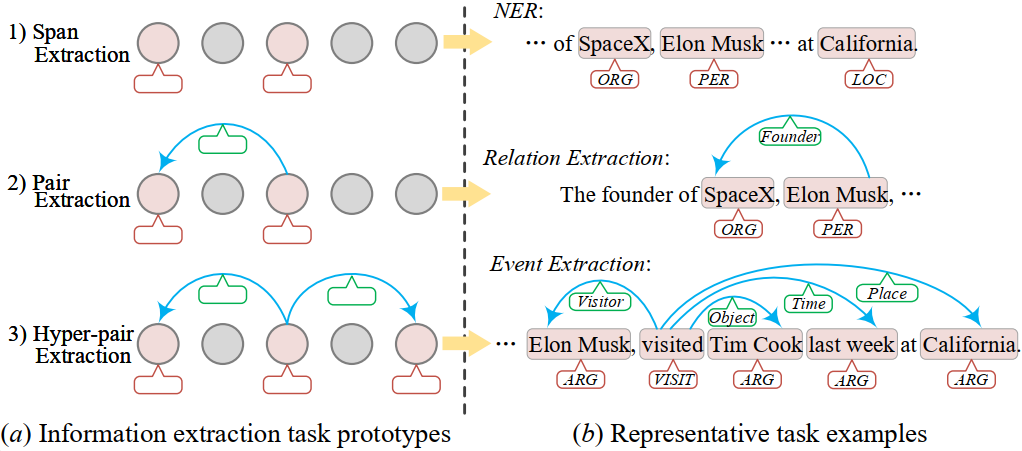

UIE has been proposed to unify all information extraction (IE) tasks in the NLP community: it universally converts the structure prediction of IE tasks into sequence prediction with generative LMs. All IE tasks essentially revolve around predicting two key elements: <mention spans> and/or their <semantic relations>. In this project, we therefore reduce all IE tasks to three prototypes: span extraction, pair extraction, and hyper-pair extraction:

- I) Span Extraction, e.g.,
  - named entity recognition (NER)
  - aspect-based sentiment analysis (ABSA)
  - aspect-term extraction (ATE)
- II) Pair Extraction, e.g.,
  - relation extraction (RE)
  - aspect-opinion pair extraction (AOP)
  - aspect-based sentiment triplet extraction (ASTE)
- III) Hyper-pair Extraction, e.g.,
  - event extraction (EE)
  - semantic role labeling (SRL)
  - opinion role labeling (ORL)

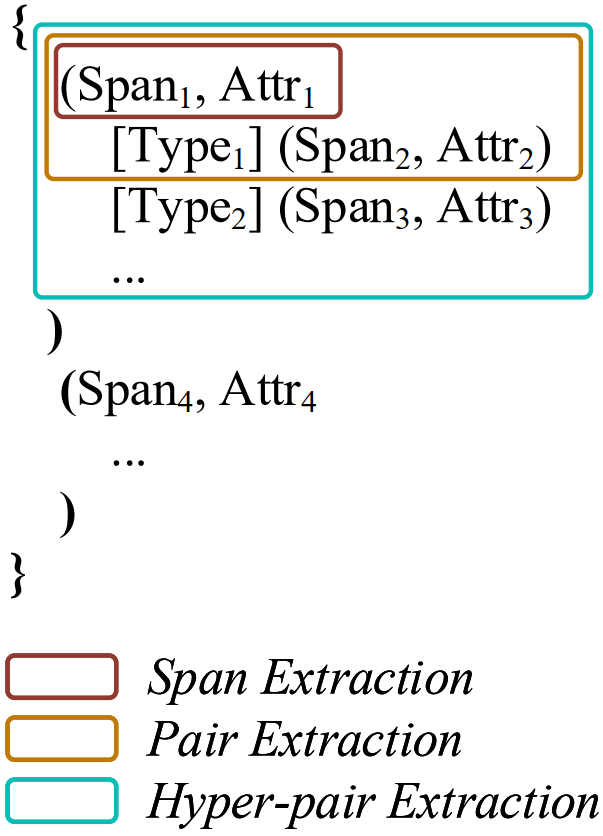

Under this scheme, mention spans are described by <Span> terms with their corresponding <Span Attribute> labels, and semantic relations are denoted by <Relation> labels. All IE structures are then cast into a single sequential representation, the Linearized Hierarchical Expression (LHE). For example:

- in span extraction:
  - `{ ( Span_1 , Attr_1 ) , ... , ( Span_i , Attr_i ) , ... }`
- in pair extraction:
  - `{ ... , ( Span_i , Attr_i [ Rel_k ] Span_j , Attr_j ) , ... }`
- in hyper-pair extraction:
  - `{ ... , ( Span_i , Attr_i [ Rel_k ] Span_j , Attr_j [ Rel_m ] Span_n , Attr_n , ... ) , ... }`
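The LHE templates above can be sketched as a small linearizer and parser. This is a minimal illustration, not the LasUIE code: the `Span`/`Record` types and the `linearize`/`parse` functions are hypothetical names, and the sketch assumes that span texts and labels contain none of the delimiter characters `( ) [ ] ,` and that each `[ Rel ]` links the span before it to the span after it, as the templates are written.

```python
import re
from dataclasses import dataclass, field
from typing import List


@dataclass
class Span:
    text: str  # the mention span, e.g. "Barack Obama"
    attr: str  # its span-attribute label, e.g. "person"


@dataclass
class Record:
    """One LHE tuple: Span_1 [Rel] Span_2 [Rel] ... Span_n (hypothetical type).

    A single span (span extraction) is a chain of length 1; a pair has
    one relation and a hyper-pair has two or more.
    """
    spans: List[Span]
    rels: List[str] = field(default_factory=list)


def linearize(records: List[Record]) -> str:
    """Cast a list of records into an LHE string."""
    parts = []
    for rec in records:
        if len(rec.rels) != len(rec.spans) - 1:
            raise ValueError("a record needs exactly one relation between consecutive spans")
        body = f"{rec.spans[0].text} , {rec.spans[0].attr}"
        for rel, span in zip(rec.rels, rec.spans[1:]):
            body += f" [ {rel} ] {span.text} , {span.attr}"
        parts.append(f"( {body} )")
    return "{ " + " , ".join(parts) + " }"


def parse(lhe: str) -> List[Record]:
    """Invert linearize(), assuming no delimiter characters inside texts or labels."""
    records = []
    for body in re.findall(r"\(\s*(.*?)\s*\)", lhe):
        # The capturing group makes re.split return [span, rel, span, rel, span, ...].
        pieces = re.split(r"\s*\[\s*(.*?)\s*\]\s*", body)
        spans = []
        for chunk in pieces[0::2]:
            text, attr = chunk.rsplit(",", 1)  # the label follows the last comma
            spans.append(Span(text.strip(), attr.strip()))
        records.append(Record(spans, pieces[1::2]))
    return records


if __name__ == "__main__":
    pair = [Record([Span("Barack Obama", "person"), Span("Hawaii", "location")], ["born_in"])]
    print(linearize(pair))
    # { ( Barack Obama , person [ born_in ] Hawaii , location ) }
    assert parse(linearize(pair)) == pair
```

Treating span extraction as a one-span record and pair or hyper-pair extraction as longer chains lets a single record type, and a single string format, cover all three prototypes.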

2. UIE with Structure-aware Generative Language Model

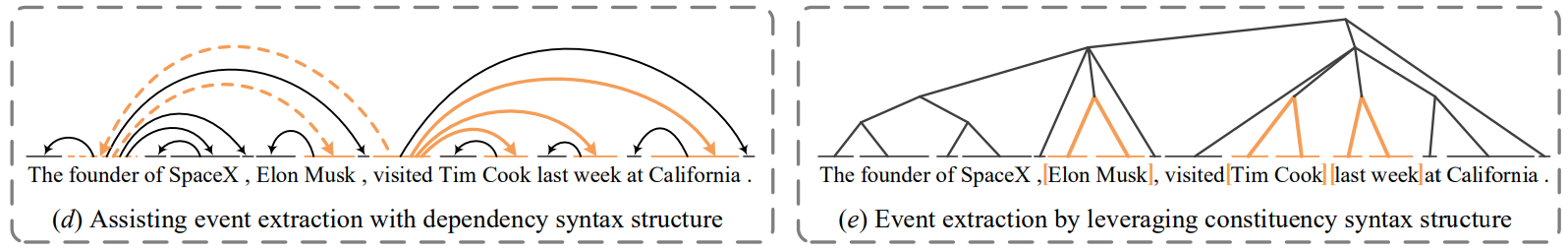

As cast above, UIE has two key common challenges of IEs:

-

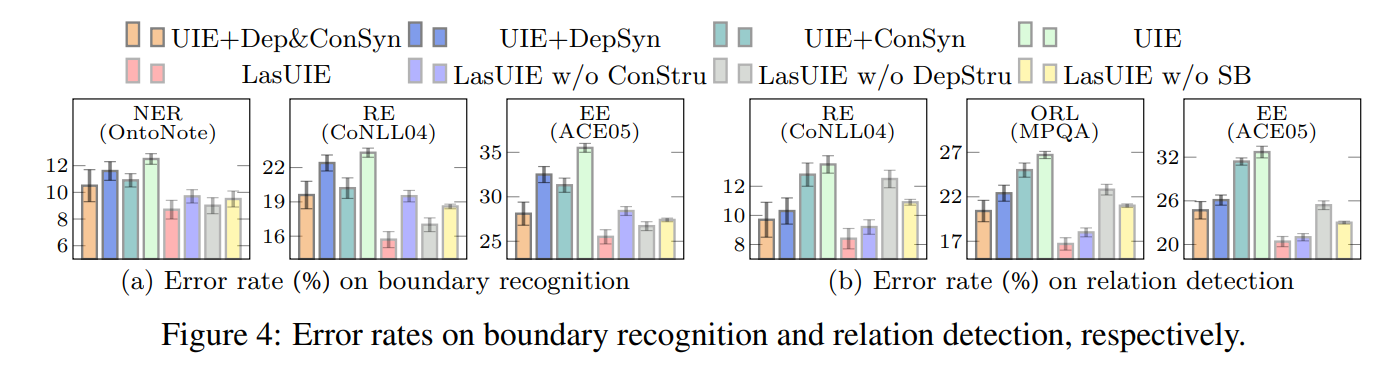

• Boundary Identification of each span terms (for UIE-element-II: span extraction).

-

• Long-range Dependence between different span terms (for UIE-element-I: relation extraction);

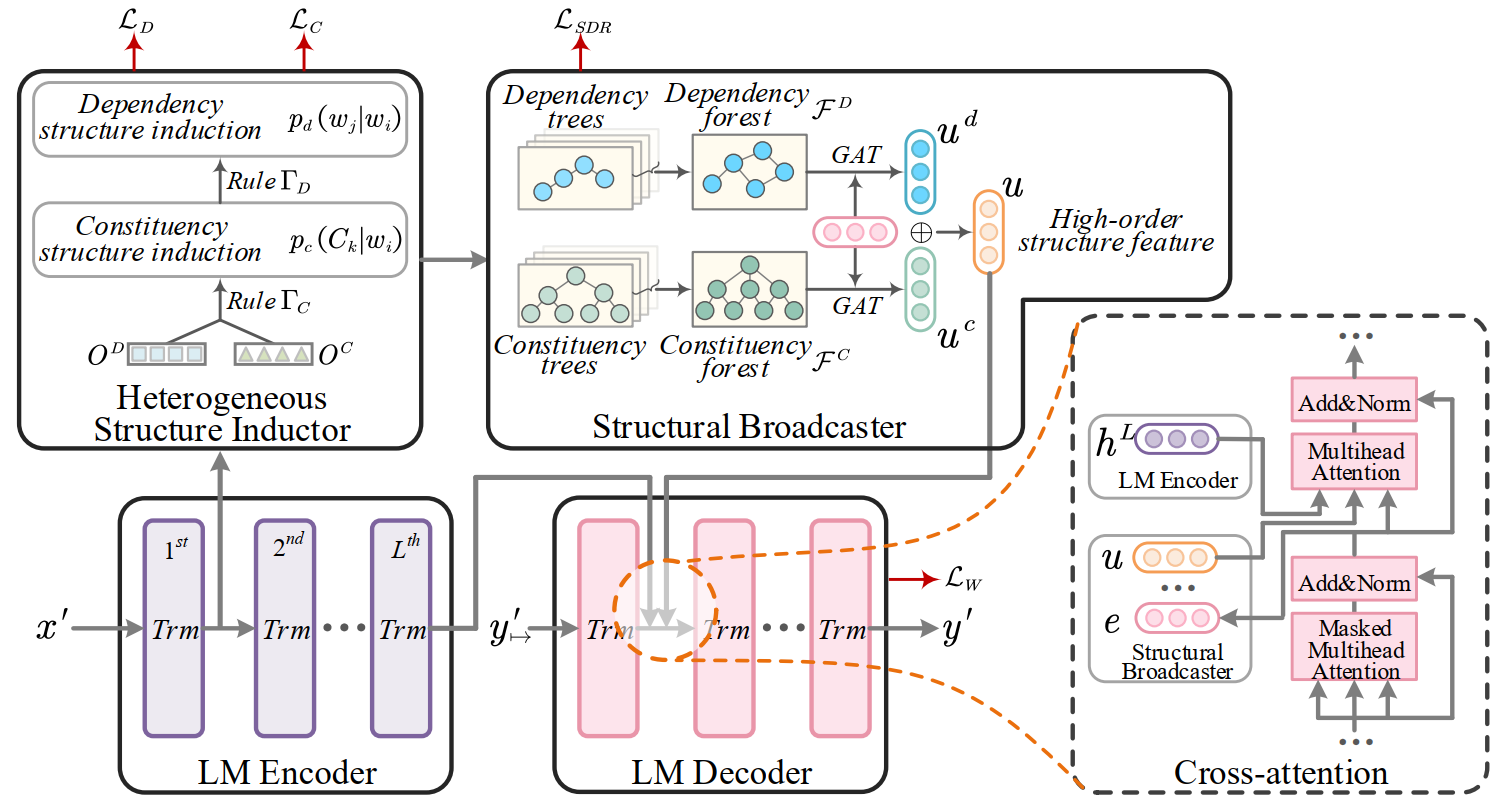

We thus propose to address the two challenges by modeling both the constituency and the dependency structures of syntax: constituency syntax mainly benefits the first challenge (span boundaries), while dependency syntax mainly aids the second (long-range dependence).
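To make this division of labor concrete, the following sketch uses hand-written parses. These are hypothetical annotations, not the output of any particular parser, and the sketch does not reproduce LasUIE's latent structure induction; `constituent_spans` and `tree_distance` are illustrative helpers. Constituents such as the NP "Palo Alto" mark candidate span boundaries, while the dependency tree brings "Jobs" and "Alto", 11 tokens apart in the sentence, within 3 arcs of each other.

```python
from collections import deque
from typing import List, Tuple

# Hand-written, hypothetical annotations for illustration only.
TOKENS = ["Steve", "Jobs", ",", "who", "founded", "Apple", "in", "1976", ",",
          "died", "in", "Palo", "Alto"]
# HEADS[i] is the index of token i's syntactic head; -1 marks the root ("died").
HEADS = [1, 9, 4, 4, 1, 4, 7, 4, 4, -1, 11, 9, 11]
# Constituency parse of the main clause "Steve Jobs died in Palo Alto".
SHORT = ["Steve", "Jobs", "died", "in", "Palo", "Alto"]
PARSE = "(S (NP (NNP Steve) (NNP Jobs)) (VP (VBD died) (PP (IN in) (NP (NNP Palo) (NNP Alto)))))"


def constituent_spans(bracketed: str) -> List[Tuple[str, int, int]]:
    """Return (label, start, end) for every phrasal constituent; end is exclusive."""
    toks = bracketed.replace("(", " ( ").replace(")", " ) ").split()
    spans, stack = [], []
    pos = i = 0  # pos counts the words consumed so far
    while i < len(toks):
        if toks[i] == "(":
            # A preterminal "( POS word )" covers exactly one word.
            if toks[i + 2] not in ("(", ")") and toks[i + 3] == ")":
                pos += 1
                i += 4
                continue
            stack.append((toks[i + 1], pos))  # open a phrase at the current word
            i += 2
        elif toks[i] == ")":
            label, start = stack.pop()  # close the innermost open phrase
            spans.append((label, start, pos))
            i += 1
        else:
            raise ValueError(f"unexpected token {toks[i]!r}")
    return spans


def tree_distance(heads: List[int], a: int, b: int) -> int:
    """Number of arcs between tokens a and b, treating the tree as undirected."""
    adj = {i: [] for i in range(len(heads))}
    for child, head in enumerate(heads):
        if head >= 0:
            adj[child].append(head)
            adj[head].append(child)
    dist = {a: 0}
    queue = deque([a])
    while queue:  # breadth-first search from a
        node = queue.popleft()
        if node == b:
            return dist[node]
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    raise ValueError("tokens are not connected")


if __name__ == "__main__":
    jobs, alto = TOKENS.index("Jobs"), TOKENS.index("Alto")
    print("linear distance:", alto - jobs)  # 11
    print("dependency distance:", tree_distance(HEADS, jobs, alto))  # 3
    for label, start, end in constituent_spans(PARSE):
        if label == "NP":
            print(" ".join(SHORT[start:end]))  # "Steve Jobs", then "Palo Alto"
```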

To put this structure-aware modeling into practice, we propose learning a Latent Adaptive Structure-aware Generative Language Model for UIE, a.k.a. LasUIE, in three stages:

-

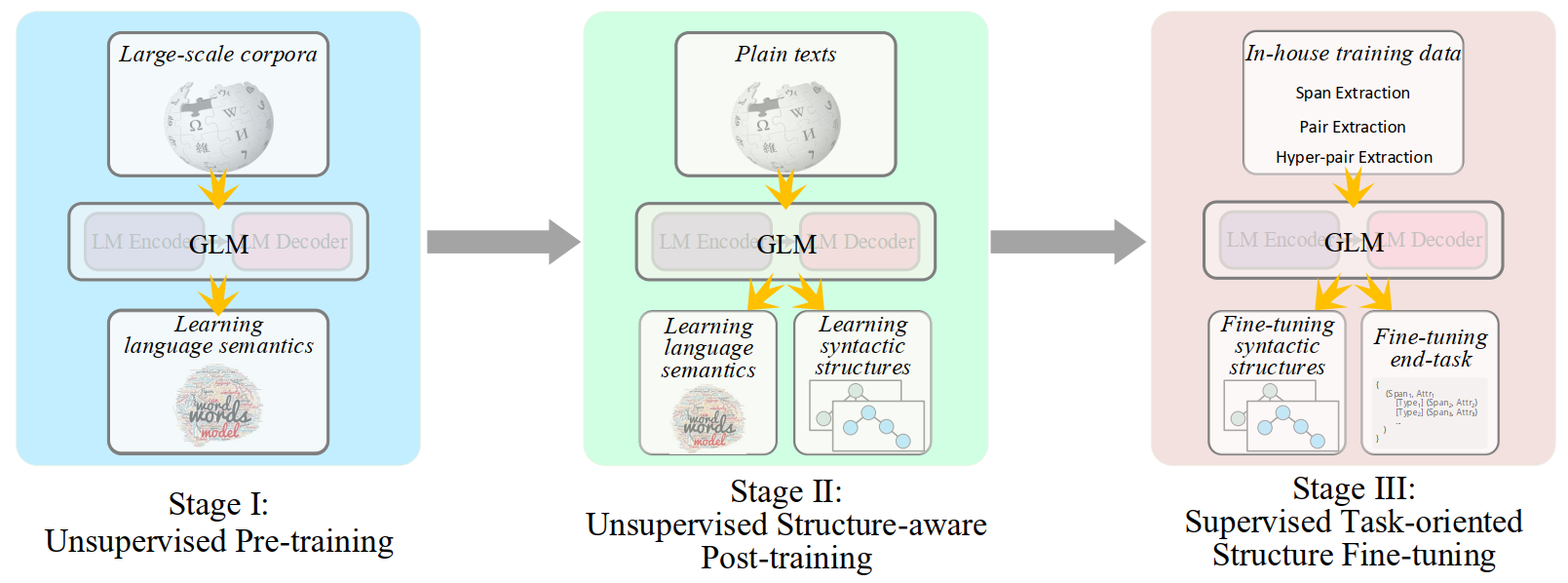

• Stage-I: unsupervised generic pre-training :

- generally using an off-the-shelf well-trained generative LM (GLM), e.g., BART, T5.

-

• Stage-II: unsupervised structure-aware post-training :

- a newly introduced procedure in this project, inserted between the pre-training and fine-tuning stages for structure learning.

-

• Stage-III: supervised task-oriented structure fine-tuning :

- a newly introduced procedure in this project, along with the task-specific finetuning.

2.1. Unsupervised structure-aware post-training

A Heterogeneous Structure Inductor (HSI) module enriches the backbone GLM with structural knowledge in an unsupervised manner, reinforcing its awareness of linguistic syntax.

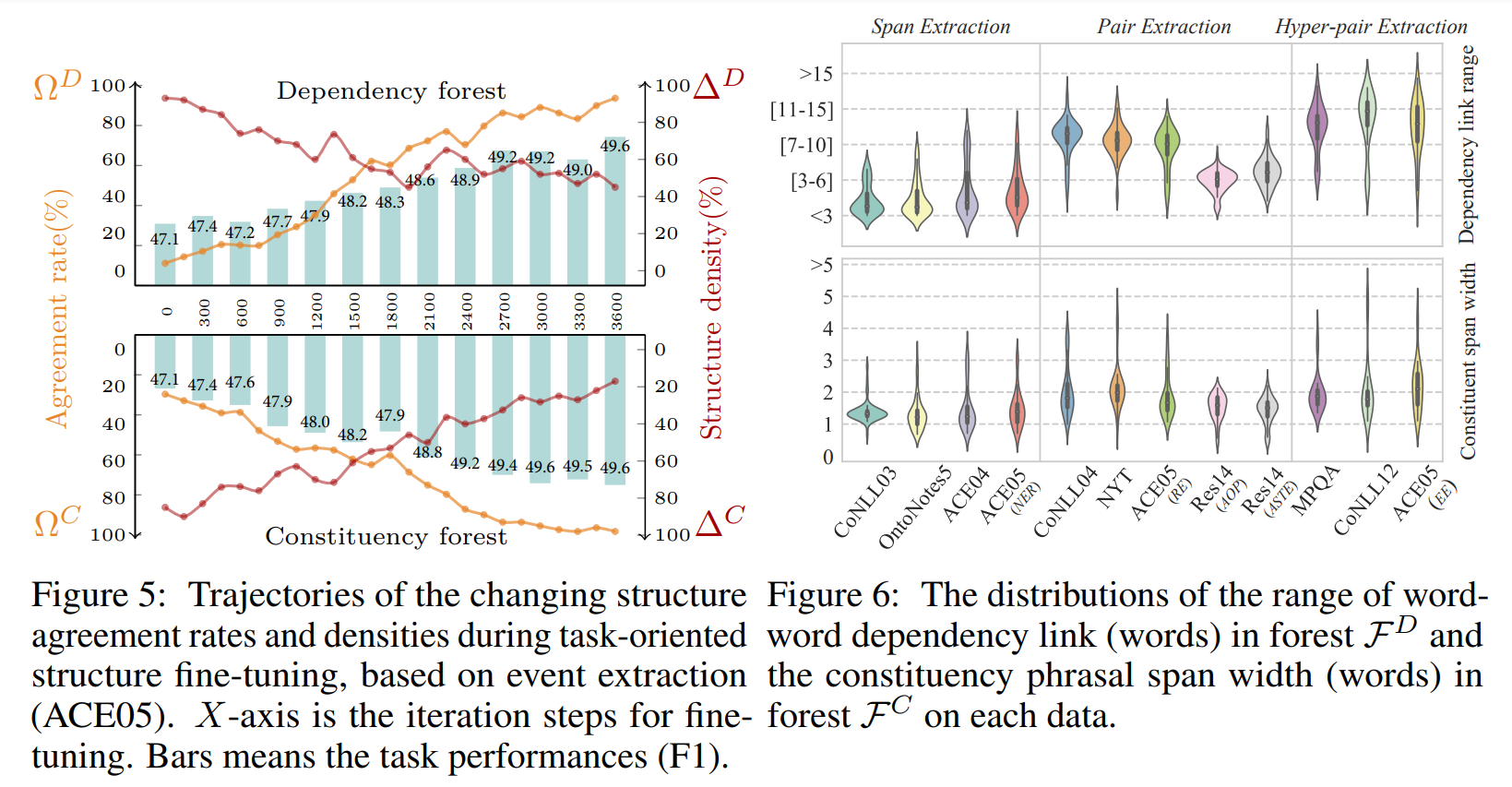

2.2. Supervised task-oriented structure fine-tuning

We then further fine-tune the syntactic attributes within the GLM with a stochastic policy gradient algorithm that takes end-task performance directly as feedback, so that the learned structural features best match the needs of the end task.
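The feedback loop can be illustrated with plain REINFORCE over a toy categorical policy. This is a generic sketch, not LasUIE's actual formulation: each action stands in for one candidate structural configuration, the hypothetical `reward_fn` stands in for end-task performance (e.g., a development-set F1), and the scores in the demo are made-up numbers.

```python
import math
import random
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(reward_fn: Callable[[int], float], num_choices: int,
              steps: int = 3000, lr: float = 0.1, seed: int = 0) -> List[float]:
    """REINFORCE with a moving-average baseline over a categorical policy.

    Returns the final action probabilities.
    """
    rng = random.Random(seed)
    logits = [0.0] * num_choices
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(num_choices), weights=probs)[0]  # sample a structure
        reward = reward_fn(action)  # end-task feedback for the sampled structure
        advantage = reward - baseline
        for k in range(num_choices):
            # Gradient of log softmax(logits)[action] with respect to logits[k].
            grad = (1.0 if k == action else 0.0) - probs[k]
            logits[k] += lr * advantage * grad  # ascend the expected reward
        baseline = 0.9 * baseline + 0.1 * reward  # running estimate of mean reward
    return softmax(logits)


if __name__ == "__main__":
    # Made-up task scores for three candidate structures.
    scores = [0.55, 0.80, 0.62]
    probs = reinforce(lambda a: scores[a], num_choices=len(scores))
    print([round(p, 3) for p in probs])  # mass should concentrate on index 1
```

Subtracting the baseline leaves the expected gradient unchanged but reduces its variance, which matters when the reward is a noisy task metric rather than a differentiable loss.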